Why doesn't data engineering feel like software engineering?

Summary

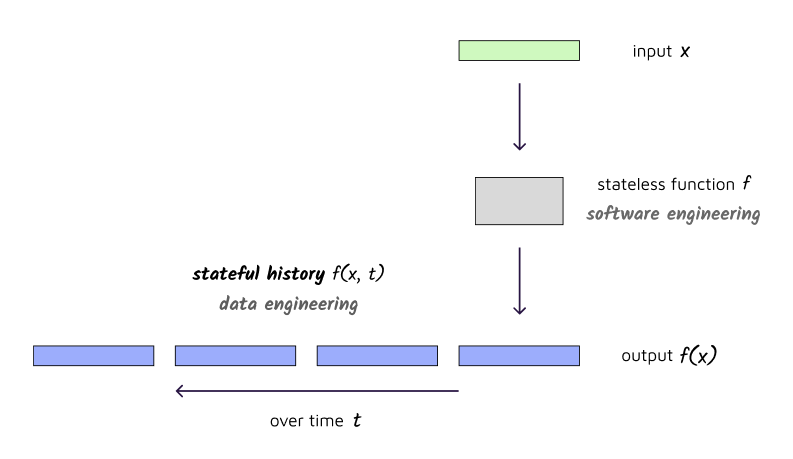

- The role of data infrastructure is to record history and, eventually, interpret it. The role of software infrastructure is to transform and produce history (data).

- Data engineers are fundamentally concerned with how state changes over time. Software engineers are concerned with change at a point in time (input/output).

It is perhaps telling that dbt, one of the fastest growing frameworks in data engineering, long counted among its many features the ability to perform data tests but, curiously, not unit tests (native unit tests arrived only in dbt 1.8). Software engineers write unit tests, so why don’t data engineers?

Unit tests evaluate the correctness of our functions. If I pass a test string to an MD5 hashing function, do I get the correct MD5 hash back? If I pass a count of zero to a function that computes an average, do I correctly catch the DivideByZero error, or do I instead throw an unhandled exception that crashes the program? Unit tests take our inputs and outputs as “given” and assert the correctness of our logic in between.
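To make the two examples concrete, here is a minimal sketch in Python. The function names `md5_hex` and `safe_average` are made up for illustration, and Python's `ZeroDivisionError` stands in for the generic DivideByZero error. The inputs and expected outputs are fixed in advance; only the logic in between is under test.

```python
import hashlib


def md5_hex(text: str) -> str:
    """Return the hexadecimal MD5 digest of a UTF-8 encoded string."""
    return hashlib.md5(text.encode("utf-8")).hexdigest()


def safe_average(total: float, count: int):
    """Return total / count, or None when count is zero (hypothetical helper)."""
    try:
        return total / count
    except ZeroDivisionError:
        # Handle the error here instead of letting it crash the program.
        return None


def test_md5_hex():
    # The MD5 digest of the empty string is a well-known constant.
    assert md5_hex("") == "d41d8cd98f00b204e9800998ecf8427e"


def test_safe_average():
    assert safe_average(10, 4) == 2.5
    assert safe_average(10, 0) is None
```

A test runner such as pytest would pick up the `test_` functions automatically. Notice that neither test looks at real data: both exercise the logic against inputs we chose ourselves.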

Data tests, on the other hand, evaluate the correctness of our data. Are we ingesting data with duplicate primary keys? Do we have null data on critical fields? Is today’s sales volume unusually high or low? Is our data stale? Data tests take our logic as “given” and assert the correctness of the input or output data.
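A rough sketch of what such checks might look like in plain Python follows. The `orders` rows, the field names and the helper functions are all invented for illustration; in dbt these checks would normally be declared as tests on a model rather than written by hand.

```python
from collections import Counter
from datetime import date, timedelta


def duplicate_keys(rows, key):
    """Return the key values that appear more than once."""
    counts = Counter(row[key] for row in rows)
    return sorted(k for k, n in counts.items() if n > 1)


def rows_with_nulls(rows, field):
    """Return the rows in which a critical field is missing or None."""
    return [row for row in rows if row.get(field) is None]


def is_stale(rows, date_field, today, max_age_days=1):
    """Return True when the newest row is older than max_age_days."""
    newest = max(row[date_field] for row in rows)
    return (today - newest) > timedelta(days=max_age_days)


# Hypothetical sample data containing a duplicate key and a null amount.
orders = [
    {"order_id": 1, "amount": 20.0, "order_date": date(2024, 1, 1)},
    {"order_id": 2, "amount": None, "order_date": date(2024, 1, 2)},
    {"order_id": 2, "amount": 35.0, "order_date": date(2024, 1, 2)},
]

print(duplicate_keys(orders, "order_id"))
print(len(rows_with_nulls(orders, "amount")))
print(is_stale(orders, "order_date", today=date(2024, 1, 10)))
```

None of these functions care how the rows were produced. They only ask whether the data, as it arrived, looks the way we expect.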

When you write code, you are essentially writing functions in a way that resembles pure mathematics. Take some input, apply some logic, produce some output. Take input x, apply function f, and produce output f(x). All software can be thought of as f.

Data, on the other hand, is x and f(x). It is what goes into f and what comes out of f. Data is “state” - the state of the world - that is transformed by some function. When you enter 4 + 3 into an interpreter, you are passing two pieces of state (the operands 4 and 3) through an addition operator to produce the output 7.

Notice how the addition operator f is ignorant of the world external to its scope. The state of the world - the set of numbers which can be added - lives beyond f. Data lives in some persistent data store, such as a database, file system or physical medium - but not in f.

When you are the engineer writing f, life in some sense is easy. f, after all, is known.

Sure, you might wonder about the algorithmic complexity of f - how it performs in space and time over large amounts of data. You might wonder, given f and other fs, what else can be proved. You might wonder, because f is pure and stateless, how you might parallelize f across many machines. But at the end of the day, f is known, and given some input, we know what the output will be.

In data land, f is not known. x and f(x) are. Consider a file system in the wild. What produced these files? What programs wrote these binaries? The processes which generated this data - or in statistical parlance these “data generating processes” - are not known. We may speculate on what they are, but we cannot know for sure.

A substantial portion of applied statistics revolves around estimating the data generating process f based exclusively on observable data f(x). This contrasts with mathematics, where f is defined and, given f, other theorems can be deduced. Statistics is inductive while mathematics is deductive. The same divide separates data and code.
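As a toy illustration of that inductive step, the sketch below hides a process behind a function and then tries to recover it from observations alone. Everything here is an assumption made for the example: the hidden process is a noisy straight line, and ordinary least squares is just one of many ways to estimate it.

```python
import random


def hidden_process(x, rng):
    """Stand-in for the unknown f. In practice we never get to read this code."""
    return 3.0 * x + 2.0 + rng.gauss(0.0, 1.0)


def fit_line(xs, ys):
    """Estimate slope and intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


rng = random.Random(0)  # fixed seed so the run is repeatable
xs = list(range(100))
ys = [hidden_process(x, rng) for x in xs]  # all we observe are x and f(x)

slope, intercept = fit_line(xs, ys)
print(f"estimated f(x) = {slope:.2f} * x + {intercept:.2f}")
```

The estimate lands close to the true slope and intercept, but it remains an estimate. With real data there is no `hidden_process` to compare against, so the fitted line is a hypothesis about f, not a proof.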

When business people ask data engineers why a certain number went up or down, it is not the same as asking a software engineer why a certain number went up or down. Inferring the “why” from data alone is fundamentally an inductive process. Data engineers may explain what records changed, but the explanation for why they changed lives beyond the data. It must be hypothesized and inferred, often by way of statistics.

Software, on the other hand, is deductive. If a number output by a function is higher than expected, we need only inspect the inputs and follow the logic of the function through. In software, f is known.

This difference between data and code permeates all aspects of data and software engineering. Data engineers think of the data as “real” and the code as unknown. Data is history, and history can never be deleted, however unmanageable it becomes over time. Software engineers think of the code as “real” - stateless applications which can be spun up or down at will - with comparatively less regard for the “state” that will be recorded for all of history.

Of course there is overlap between the two. Data engineers transform data, and software engineers manage state, but the longevity and granularity of the state they manage is typically what differentiates them.

For data engineers, everything becomes history, and history never goes away. For software engineers, state may be externalized to a config.yml, which itself may be versioned over time, but only for as long as prior versions are supported - generally not for all time.

This explains why data practitioners and software engineers are concerned, at a technical level, with fundamentally different things.

Data engineers think about data volume, data durability, relational modeling, query access patterns, metadata, schema changes, bitemporal modeling, aggregation, and full data refreshes. Data analysts and statisticians use this data to infer patterns; namely, the data generating processes that produced such data. It is in this way that we learn from history.

Software engineers think less about the output data which lives forever, and instead about the input data which goes into a particular function or application. They think about code organization (e.g. OOP, public interfaces), static typing, serialization, containerization, parallel processing, algorithm development and algorithmic complexity. For software engineers, outputs over time are not as important as outputs at any given time.

The dividing line between software engineers and data engineers can then be defined, however lightly, by how the two view history. Software engineers are concerned with how history is made, and data engineers with how it is recorded and understood.